Using the Snowflake Connector for Spark, this sample program reads data from and writes data to Snowflake. The connector enables using Snowflake as an Apache Spark data source, similar to other data sources (PostgreSQL, HDFS, S3, etc.).
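A minimal PySpark sketch of that read/write round trip follows. It assumes the Snowflake Spark connector and the Snowflake JDBC driver JARs are already on the Spark classpath, and every account, table, and credential value is a placeholder. The helper names `build_sf_options`, `read_table`, and `write_table` are hypothetical; the `sfURL`/`sfUser`/`sfPassword`/`sfDatabase`/`sfSchema`/`sfWarehouse` option keys and the `net.snowflake.spark.snowflake` source name are the ones the connector documents.

```python
from typing import Any, Dict

# Fully qualified data source name documented for the Snowflake Connector for Spark.
SNOWFLAKE_SOURCE_NAME = "net.snowflake.spark.snowflake"


def build_sf_options(account_url: str, user: str, password: str,
                     database: str, schema: str, warehouse: str) -> Dict[str, str]:
    """Collect the connection options the connector expects (hypothetical helper)."""
    return {
        "sfURL": account_url,  # e.g. "<account_identifier>.snowflakecomputing.com"
        "sfUser": user,
        "sfPassword": password,
        "sfDatabase": database,
        "sfSchema": schema,
        "sfWarehouse": warehouse,
    }


def read_table(spark: Any, sf_options: Dict[str, str], table: str) -> Any:
    """Load a Snowflake table into a Spark DataFrame via the connector."""
    return (spark.read.format(SNOWFLAKE_SOURCE_NAME)
            .options(**sf_options)
            .option("dbtable", table)
            .load())


def write_table(df: Any, sf_options: Dict[str, str], table: str,
                mode: str = "append") -> None:
    """Write a Spark DataFrame to a Snowflake table via the connector."""
    (df.write.format(SNOWFLAKE_SOURCE_NAME)
       .options(**sf_options)
       .option("dbtable", table)
       .mode(mode)
       .save())
```

In a Spark session started with the connector available, `df = read_table(spark, opts, "MY_TABLE")` followed by `write_table(df, opts, "MY_TABLE_COPY")` would copy one table into another. The `spark` and `df` parameters are left untyped so the module imports without PySpark installed.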

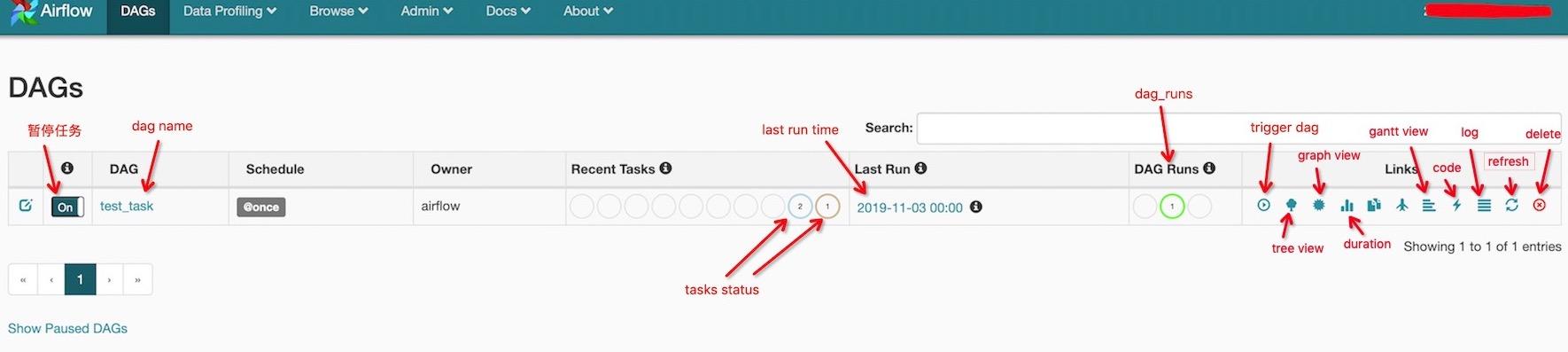

How to trigger an Airflow DAG via the API
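On Airflow 2, a DAG run can be created over HTTP with the stable REST API endpoint `POST /api/v1/dags/{dag_id}/dagRuns`, whose JSON body may carry a `conf` object. The standard-library sketch below assumes a webserver at a placeholder URL with the basic-auth API backend enabled in `airflow.cfg`; the helper names, DAG id, and credentials are hypothetical.

```python
import base64
import json
import urllib.request
from typing import Any, Dict, Optional
from urllib.parse import quote


def build_trigger_request(base_url: str, dag_id: str, user: str, password: str,
                          conf: Optional[Dict[str, Any]] = None) -> urllib.request.Request:
    """Build a POST to Airflow 2's stable REST API that creates a new DAG run."""
    url = f"{base_url.rstrip('/')}/api/v1/dags/{quote(dag_id, safe='')}/dagRuns"
    body = json.dumps({"conf": conf or {}}).encode("utf-8")
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            # Assumes the basic-auth API backend is enabled on the webserver.
            "Authorization": f"Basic {token}",
        },
    )


def trigger_dag(req: urllib.request.Request) -> Dict[str, Any]:
    """Send the request and return the created DAG run as a dict."""
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Calling `trigger_dag(build_trigger_request("http://localhost:8080", "example_dag", "admin", "admin", {"key": "value"}))` against a running webserver would return the new run's details, including its `dag_run_id`. Building the request separately from sending it keeps the payload easy to inspect before anything goes over the network.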

Most of the examples online show how to set up a connection with a regular string password, but my company authenticates with a private key (key-pair authentication) instead.
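With key-pair authentication, the Spark connector takes the private key through the `pem_private_key` option in place of `sfPassword`, as the base64 body of an unencrypted PKCS#8 PEM key with its header, footer, and line breaks removed. The sketch below does that stripping with the standard library only. The helper names are hypothetical and the key text is a placeholder; an encrypted key (`BEGIN ENCRYPTED PRIVATE KEY`) would first have to be decrypted, which Snowflake's own examples do with the `cryptography` package.

```python
import re
from typing import Dict

# Matches the BEGIN/END armor lines of an unencrypted PKCS#8 PEM key.
_PEM_ARMOR = re.compile(r"-----(BEGIN|END) PRIVATE KEY-----")


def pem_to_option_value(pem_text: str) -> str:
    """Strip the PEM header/footer and all whitespace, leaving the base64 body."""
    body = _PEM_ARMOR.sub("", pem_text)
    return "".join(body.split())


def key_pair_sf_options(account_url: str, user: str, pem_text: str,
                        database: str, schema: str, warehouse: str) -> Dict[str, str]:
    """Spark-connector options that use key-pair auth instead of sfPassword."""
    return {
        "sfURL": account_url,
        "sfUser": user,
        "pem_private_key": pem_to_option_value(pem_text),
        "sfDatabase": database,
        "sfSchema": schema,
        "sfWarehouse": warehouse,
    }
```

The key itself is typically read from a file or secret store at runtime rather than hard-coded, so only the stripping step needs to live in the job code.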

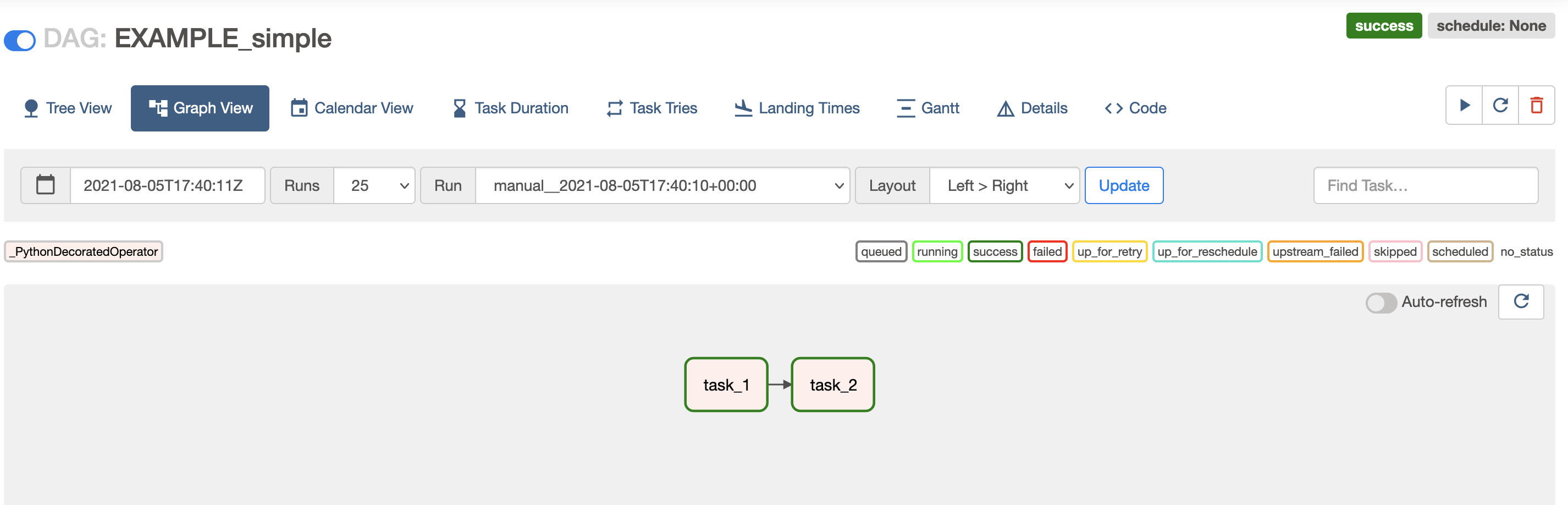

I am looking to create an ETL process that runs queries against Snowflake. To register the Snowflake JDBC driver as a custom connector in AWS Glue Studio:

1. Select “Create custom connector” from the “Connectors” drop-down menu on the AWS Glue Studio console.
2. In the “Connector S3 URL” field, enter the S3 location where you uploaded the Snowflake JDBC connector JAR file.
3. Enter a name in the field marked “Name” (for example, snowflake-jdbc-connector).

Airflow `webserver` command options:

- Number of workers to run the webserver on.
- The worker class to use; possible choices: sync, eventlet, gevent, tornado.
- The timeout for waiting on webserver workers.
- Set the hostname on which to run the web server.
- Daemonize instead of running in the foreground.
- The logfiles to store the webserver access and error logs. Use '-' to print to stderr.

Airflow `backfill` command options:

- The regex to filter specific task_ids to backfill (optional).
- Skip upstream tasks and run only the tasks matching the regex. Only works in conjunction with task_regex.
- Ignore depends_on_past dependencies for the first set of tasks only (subsequent executions in the backfill DO respect depends_on_past).
- Amount of time in seconds to wait when the limit on maximum active DAG runs (max_active_runs) has been reached before trying to execute a DAG run again.
- JSON string that gets pickled into the DagRun's conf attribute.
- If set, the backfill deletes existing backfill-related DAG runs and starts anew with fresh, running DAG runs.
- If set, the backfill automatically reruns all the failed tasks for the backfill date range instead of throwing exceptions.
- Do not attempt to pickle the DAG object to send over to the workers; just tell the workers to run their version of the code.

Airflow `run` (single task instance) command options:

- Mark jobs as succeeded without running them.
- Ignore previous task instance state and rerun regardless of whether the task already succeeded or failed.
- Ignore all non-critical dependencies, including ignore_ti_state and ignore_task_deps.
- Ignore task-specific dependencies: upstream, depends_on_past, and retry delay dependencies.
- Ignore depends_on_past dependencies (but respect upstream dependencies).
- Pickle (serialize) the DAG and ship it to the worker.
- Serialized pickle object of the entire DAG (used internally).
- Do not capture standard output and error streams (useful for interactive debugging).
- Path to a config file to use instead of airflow.cfg.
- File location or directory from which to look for the DAG. Defaults to '[AIRFLOW_HOME]/dags', where [AIRFLOW_HOME] is the value you set for AIRFLOW_HOME in airflow.cfg.
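Besides the JAR location, a Glue JDBC connector is configured with the driver class name and a JDBC URL. Snowflake's JDBC driver (class `net.snowflake.client.jdbc.SnowflakeDriver`) accepts URLs of the form `jdbc:snowflake://<account_identifier>.snowflakecomputing.com/?<connection_params>`. This small sketch assembles one; the account identifier and parameter values are placeholders, the helper name is hypothetical, and credentials are left out on the assumption that they come from a Glue connection or secret rather than the URL.

```python
from typing import Dict
from urllib.parse import urlencode

# Driver class name published with the Snowflake JDBC driver.
SNOWFLAKE_JDBC_DRIVER = "net.snowflake.client.jdbc.SnowflakeDriver"


def snowflake_jdbc_url(account_identifier: str, params: Dict[str, str]) -> str:
    """Return jdbc:snowflake://<account>.snowflakecomputing.com/?<url-encoded params>."""
    query = urlencode(params)  # keeps the dict's insertion order
    return f"jdbc:snowflake://{account_identifier}.snowflakecomputing.com/?{query}"


if __name__ == "__main__":
    # Placeholder values; credentials are deliberately not embedded in the URL.
    print(snowflake_jdbc_url("myorg-myaccount",
                             {"warehouse": "MY_WH", "db": "MY_DB", "schema": "PUBLIC"}))
```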

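The backfill options described above map onto command-line flags. As a sketch, assuming Airflow 2's `airflow dags backfill` spelling (Airflow 1.10 used `airflow backfill` with underscored flag names), this standard-library helper assembles the argv list, including a JSON `--conf` payload for the DagRun's conf attribute. The helper name is hypothetical and the DAG id and dates are placeholders.

```python
import json
import shlex
from typing import Any, Dict, List, Optional


def backfill_argv(dag_id: str, start: str, end: str,
                  conf: Optional[Dict[str, Any]] = None,
                  task_regex: Optional[str] = None,
                  rerun_failed: bool = False) -> List[str]:
    """Assemble an Airflow 2 `airflow dags backfill` command line as an argv list."""
    argv = ["airflow", "dags", "backfill", "-s", start, "-e", end]
    if conf:
        # --conf takes a JSON string that ends up in the DagRun's conf attribute.
        argv += ["--conf", json.dumps(conf)]
    if task_regex:
        # Restrict the backfill to task_ids matching this regex.
        argv += ["--task-regex", task_regex]
    if rerun_failed:
        # Rerun failed tasks in the date range instead of raising.
        argv.append("--rerun-failed-tasks")
    argv.append(dag_id)
    return argv


if __name__ == "__main__":
    # Print a shell-safe version; subprocess.run(argv, check=True) would execute it.
    print(shlex.join(backfill_argv("example_dag", "2021-01-01", "2021-01-07",
                                   conf={"key": "value"}, rerun_failed=True)))
```

Building a list rather than a single string sidesteps shell-quoting problems with the JSON payload when the command is passed to `subprocess.run`.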